Unraveling The Legal Landscape Of Deepfake Porn: Protecting Women And Addressing The Gaps

Deepfakes. You might not be familiar with the term, but chances are you've come across them on social media. Picture this: iconic celebrities and renowned actors seamlessly transplanted into different scenarios and appearances, like Elon Musk as Miley Cyrus. While these digitally manipulated images may seem humorous, the technology has quickly taken a nasty turn toward nonconsensual deepfake pornography.

The rapid rise in deepfakes used to create pornography of unsuspecting women has exposed a gaping hole in the legal landscape, often leaving victims unable to press charges or force the removal of the content. States are scrambling to catch up. Here is what you need to know about the current legal protections against deepfake pornography and the gaps that remain.

What Is Deepfake Pornography?

Deepfakes are digitally created or manipulated images, recordings, or videos that portray someone doing or saying something they have not done or said. The term “deepfake” first appeared in 2017, when a Reddit user with that username began posting pornography that used face-swapping technology to place celebrities' faces into explicit videos, made possible by the slew of high-quality images of celebrities readily available online.

Since then, the use and abuse of deepfake technology has skyrocketed, and it has become a tool for exploiting and extorting women from all walks of life using a handful of photos that are easily found online. If there are a few images of you on the internet, you are at risk of being exploited in deepfake pornography. Think a private social media account makes you immune? Keep in mind that 80% of rapes are committed by someone the victim knows. Similarly, many deepfake pornography creators likely already have access to photos of the people they exploit. Take the now-defunct app DeepNude: users flooded Reddit threads with photos of ordinary, unsuspecting women they wanted to “nudify,” including female co-workers, friends, classmates, exes, neighbors, and even strangers.

What the Legal Landscape Looks Like

The federal government has been slow to enact legislation against deepfake pornography, thanks in part to congressional gridlock. While most states have laws against nonconsensual pornography and revenge pornography, many of these laws do not cover deepfake pornography because the technology is so new. However, with the rise of AI and the abuses that come with it, states have become more willing to criminalize deepfake pornography.

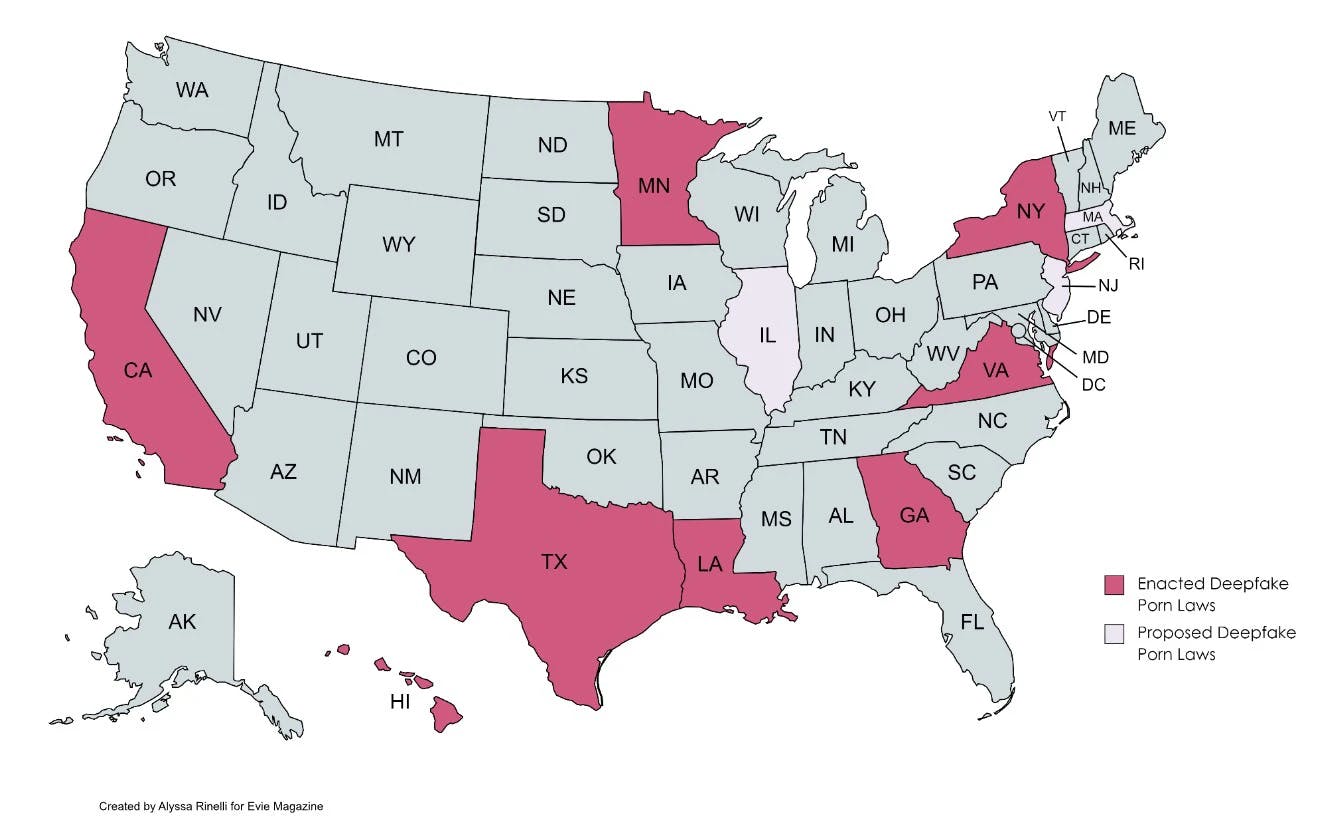

In 2019, California, Texas, and Virginia became the first states to pass laws addressing deepfakes. Today, with AI becoming more mainstream, pressure is building to enact similar legislation in other states and even nationally, and some states have already followed suit by passing deepfake pornography legislation.

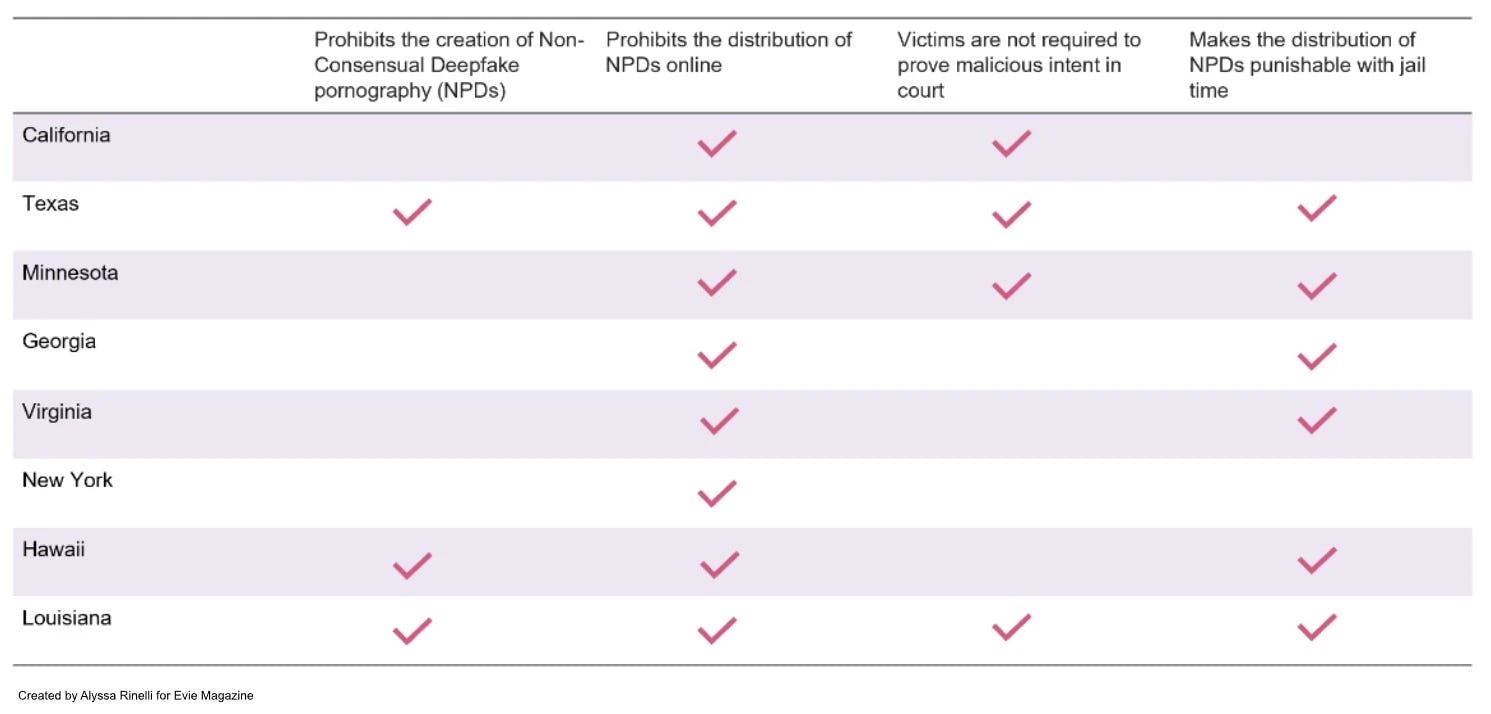

States that have enacted deepfake pornography legislation fall into two distinct categories. The first makes nonconsensual deepfake pornography a criminal offense; these states include Hawaii, Texas, Virginia, Minnesota, Louisiana, and Georgia. The second allows victims to bring civil lawsuits; these states are California and New York.

When distributing nonconsensual deepfake pornography is treated as a crime, the prosecution generally must prove that the perpetrator acted with malicious intent. If it does, the perpetrator could face jail time, with sentences that vary by state. What it takes to prove malicious intent in a deepfake pornography case also varies from state to state.

Civil claims carry a lower burden of proof. Instead of criminal charges being brought, the victim, or plaintiff, files a complaint in civil court against the person who created or circulated the deepfake pornography. The plaintiff must show that they were harmed in some way by the content, but does not have to prove malicious intent.

Even so, a civil lawsuit can be costly for the victim. In addition to attorney fees, you may have to pay filing fees, copying fees, expert witness fees, court reporter fees, transcript costs, and other unexpected expenses. While you may be able to recoup some of these costs if you win at trial, it is still a hefty burden to pay upfront for a case with an uncertain outcome.

The Gaps in Legal Coverage

Despite these state laws, women are still finding they have little to no legal recourse against deepfake pornography. Earlier this year, popular streamer QTCinderella fell victim to deepfake pornography. It began when a fellow streamer, and friend, accidentally left a browser tab open to a deepfake pornography website while live streaming; afterward, many people sent her deepfake videos of herself. QTCinderella responded that “it should not be a part of my job to have to pay money to get this stuff taken down,” adding that she would sue the website's creator. She later found that her case had no legal standing.

Illinois, New Jersey, and Massachusetts have introduced bills that would prohibit the distribution of deepfake pornography. While these bills have not yet passed, their introduction shows growing concern about deepfake pornography.

Federal Gaps

Despite the damage deepfake pornography can inflict on women, there is no federal law against it. Members of Congress have shown little interest in deepfake legislation beyond deepfakes that could cause political damage.

Congressman Joe Morelle (D-NY) recently reintroduced the Preventing Deepfakes of Intimate Images Act, legislation to “protect the right to privacy online amid a rise of artificial intelligence and digitally-manipulated content.” Morelle had introduced the bill before, but it did not advance.

Last year, President Joe Biden signed a reauthorization of the Violence Against Women Act, which allows victims of the nonconsensual disclosure of intimate images to sue in civil court. However, the law does not include language on deepfake pornography.

What Can You Do To Protect Yourself Today?

Legal protections remain limited today, but there are other ways to reduce your exposure to deepfake pornography attacks. Using privacy tools to limit the images of you that are publicly available, such as restricting who can see your accounts and controlling who can tag you in photos, makes it harder for others to obtain photos of your face to manipulate.

If you don’t want to make your social accounts private, you can consider watermarking your photos with an inconspicuous mark that identifies you as the owner. It won’t prevent deepfake creation, but it can make it harder for attackers to use your image without detection. You can also periodically run reverse image searches on search engines to see where your photos appear online, which can help you spot potential misuse. If you find something, report it to the platform or website hosting the content. If you are covered by one of the laws that exist today, you can also consider legal action against anyone who shares your images for malicious purposes. Remember, you cannot control what others do on the internet, but you can limit your exposure.

If you think you have been affected by deepfake pornography, consult a legal professional to understand your options. This article is not legal advice.
